'Think about that… twice': Tracking Coordinated Inauthentic Behaviour on Lithuanian social media

- Debunk.org

- Jun 9, 2022

Updated: Oct 10, 2023

In view of the increasingly discussed problem of Coordinated Inauthentic Behaviour (CIB) and the widespread activity of bots on social media, which artificially inflate the popularity of disinformation content and consequently harm public opinion, Debunk.org analysed a selection of suspicious posts on Facebook. This report is dedicated to one of them.

Summary

A case of Coordinated Inauthentic Behaviour (CIB) was spotted in early April on the Facebook page of Minfo.lt, a site known for spreading conspiracies and disinformation. The attack occurred a couple of days after the Bucha massacre and can be seen as an attempt to divert attention from the crimes committed by the Russian army in Ukraine.

A repost with a video from a Russian wellness guru gained 121 thousand interactions in total and was 444.4 times more popular than any other post on Minfo.

1622 comments (about 1/3) were written by 484 users and reused the same couple of images or GIFs. Many were written by the same user in a short period of time.

Even though Minfo is dedicated to a Lithuanian audience, the majority of the comments under this post were in other languages.

The comments under the video in question spread one of the main Kremlin narratives: ‘Russia should not be blamed for this’, which is in line with the justification for Russia’s aggression against Ukraine.

In total the post was shared on public groups 145 times (sometimes it was shared more than once on the same group). Many groups originated from other countries than Lithuania, such as Russia, Ukraine, Moldova, Latvia, Kazakhstan, Azerbaijan, etc.

Minfo Facebook page

The video we suspect to be part of an influence operation appeared on Minfo, a Facebook page known for spreading disinformation and created to “fight censorship” on social media. It is the social media channel of the Lithuanian ‘positive news’ website minfo.lt, run by the “Fellowship of Maximalist Psychotherapy” (“Maksimalistinės psichoterapijos draugija, VšĮ”) and headed by Marius Gabrilavičius (aka “Maksimalietis”), a self-proclaimed psychotherapist known for organising anti-vaccine protests in Lithuania and supporting the QAnon conspiracy theory, according to fact-checkers of “ReBaltica”. As a journalist of the fact-checking portal 15min.lt writes, in 2020 Mr. Gabrilavičius spent more money on ads promoting his posts than the largest media group in Lithuania (LRT), the European Parliament, the European Commission, Lithuanian political parties or commercial media.

It is worth mentioning that when a person who does not exhibit any signs of considerable wealth leads a website that employs more than 10 authors and spends more on Facebook ads than the highest-spending political party during the Lithuanian election campaign, it is at least strange. And considering that the content of Minfo.lt is openly pro-Russian, it raises reasonable doubts about the origin and purpose of these funds. Moreover, a few years ago, Mr. Gabrilavičius was interviewed by Lithuanian fact-checkers from the online television channel Laisvės TV, where he said that he also receives income in foreign countries. At that time, journalists were suspicious about the exclusive favouritism of the authors of Minfo (a self-proclaimed “positive news website”) towards the Lithuanian Peasants' and Greens' Union, which was leading the ruling coalition back then. Lithuanian agro-oligarch Ramūnas Karbauskis is the leader of that party and is well known for his close business relations with Russian state and business figures. He is also known as one of the initiators of organising a popular referendum against Lithuania's accession to NATO back in 2002. Karbauskis is also linked to possibly financing a disinformation campaign in 2013-2014 against the investment of the American energy company Chevron in the exploration and exploitation of shale gas fields in Lithuania, thus preventing Lithuania from securing energy independence from Russia.

On 5th of April the Minfo Facebook page posted a video of Artur Sita, a widely known Russian influencer and well-being and mindfulness guru, who resides in Thailand and gives lectures on self-fulfillment.

In the 3-minute-long excerpt from one of his lectures, Mr. Sita says: “If the people who are waging war now knew what they were doing it for, they would lay down their arms”. Later in the video, he rhetorically asks why peace talks are taking place behind closed doors and answers that it is not about peace at all, but about economics. He states that those who are fighting and killing each other do so because they do not know the truth, that the war is about money and not about ideas.

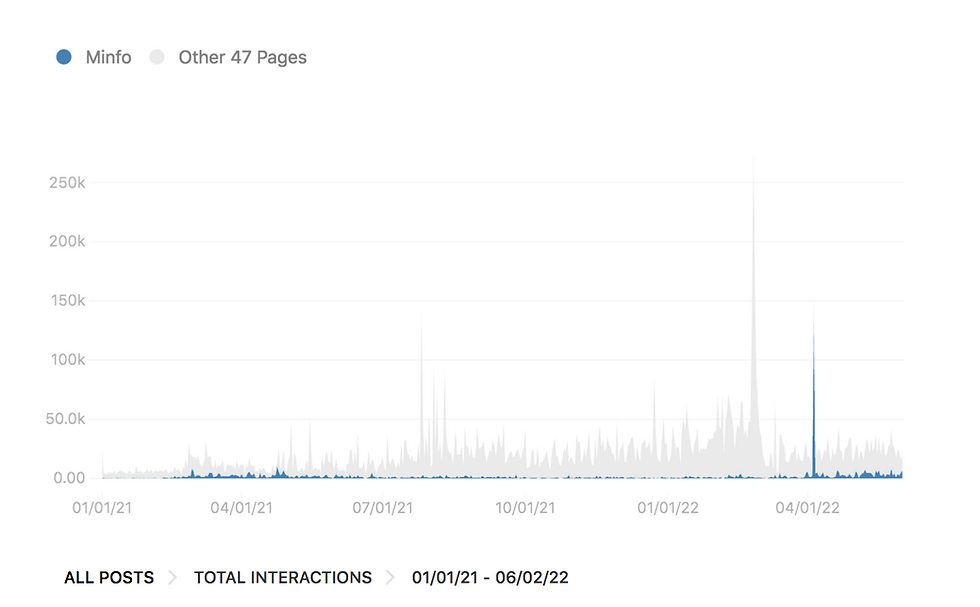

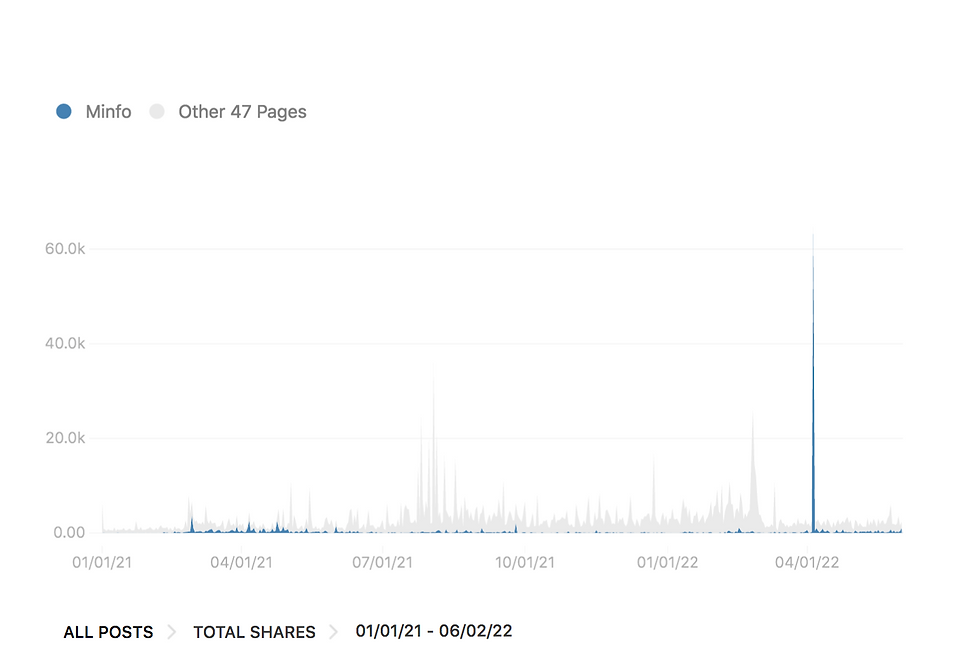

As mentioned, the video was published on the Minfo Facebook page on 5th of April, a few days after the world saw the visual evidence of the Bucha massacre. The post gained 1.52 million views (3.11 million views, including views from shares), which is a very high level of engagement. What is more, the video triggered 57K likes, more than 59K shares and 4.5K comments (in total 121 thousand interactions). According to CrowdTangle data, the Minfo post ranks third in terms of most shares among all Lithuanian pages for the period 20.05.2021-20.05.2022. What is more, it was the only case of such a rapid increase in engagement with content published on Minfo and the other Facebook pages monitored by Debunk.org over a period of 16 months. According to CrowdTangle data, this post was 444.4 times more popular than any other post on Minfo.

The disproportionate number of responses to the post is even more noticeable when compared to the number of shares of other Minfo posts, as shown on the graph below:

It is evident that the video triggered a massive number of reactions, even exceeding the number of shares of posts from other Facebook pages monitored by Debunk.org during the first days of Russia's invasion of Ukraine. It should be noted that since December 2020, Minfo has not purchased any Facebook ads, which could otherwise have explained the rise in interactions.

Using data from CrowdTangle, we could analyse the history of the interactions with the post. Although in the first days after publishing the material gained limited attention, the situation changed rapidly on April 8, when the numbers of likes, shares, and comments skyrocketed. The surge in interactions continued until April 13, after which the curve began to flatten out.

Dynamics of likes, shares, and comments

It should be noted that the video is in Russian, and the post itself contains only one word in Lithuanian: “susimastymui” (“to think about”). As the vast majority of other posts and users’ comments on Minfo are in Lithuanian, such an enormous increase of interest in a post showing a Russian influencer speaking in his mother tongue seemed at least unusual. According to CrowdTangle data, over a period of 16 months the Minfo Facebook page posted only two pieces of content in Russian. Also, to the best knowledge of Debunk.org analysts, it is the only case in the history of the Minfo Facebook page when a post triggered a massive wave of Russian-language comments.

Origin of the video

The video first appeared on Mr. Sita’s YouTube channel “Lessons of Life with Artur Sita” (“Уроки жизни с Артуром Сита”) on March 2, 2022, which is more than one month before it was published on the Minfo Facebook page. The original video attracted average interest: it has fewer than 40K views and fewer than 3K comments. However, taking into consideration that Mr. Sita’s YouTube channel has 120K subscribers, these numbers seem more organic than the sky-high numbers of reactions to the post on the Minfo Facebook page, which has fewer than 50K followers.

Interestingly, the version of the video published on the original YouTube channel is not the one posted on the Minfo Facebook page. The video posted on Facebook contains a characteristic title on a white background: “He speaks truth. Think about that!” (“Он правду говорит. Задумайтесь!”). Using reverse image search, it was possible to trace the origin of the clip in the version that was published on the Minfo page. It was posted on another popular social media platform, TikTok, by the user @Aleksa369_ on April 1, 2022 (4 days before it was published on the Minfo page).

It is worth mentioning that on this occasion the video accumulated an enormous number of reactions and views in comparison to the other posts by @Aleksa369_: most of the clips have a couple of hundred or a few thousand views, while the video showing Mr. Sita reached 1.5 million views, with almost 70K likes and more than 3.2K comments, which might indicate that it has also been artificially “boosted” on that social media platform.

The owner of the account is a Russian-speaking woman supposedly living in Germany. It should be noted that since the creation of the account in October 2020, @Aleksa369_ posted content almost exclusively about animals. In March 2022 the number of videos increased but again covered almost exclusively animal content. However, since April 1, 2022 (when Mr. Sita’s video was shared), the user started posting a number of anti-USA propaganda video clips, for example one blaming the United States for the plans of genocide of the Russian, Belarusian, and Ukrainian nations. The examples of the content posted by @Aleksa369_ are shown below:

A few days after the video showing Mr. Sita was published on TikTok, it appeared on Facebook. On April 4, 2022 (one day before it was published on Minfo) the video was uploaded to the page “Real Estate Agency Alliance” (“Агентство Недвижимости Альянс”), where it gained 55 views and 3 likes (including one from the Facebook page owner).

The difference between this version of the video and the one posted on Minfo page is the presence of the TikTok watermark in the first case and longer duration of the clip – it is 9 seconds longer.

The appearance of the provocative video clip on the company’s Facebook page exclusively dedicated to real estate commerce is also an oddity, as it is the only case in the Facebook page history to publish information unrelated to its business (even during the Russian invasion the pattern did not change). The page is relatively new - it was created on April 25, 2021. According to openly available information, the “Real Estate Agency Alliance” has an office in Myrnohrad in Donetsk Oblast (Ukraine). The company had its website on the Ukrainian version of OLX portal – however, the link is no longer working.

The manager of the company, Tatyana Petrovna Bilichenko (Татьяна Петровна Биличенко), a Ukrainian lawyer, linked her TikTok account (@rioritet), as well as her YouTube channel and Instagram profile, on her Facebook profile. However, on May 17, 2022, when the analysis was conducted, some of these social media accounts (Instagram and TikTok) had been removed. A screenshot taken the day before the takedown shows that the content liked by Tatyana Bilichenko on TikTok included, among others, the video of Mr. Sita.

It can be added that the user was also found on two Russian social media sites: top-profile.com and top1000vk.com. On both sites different information is provided about her interests, but also more importantly about the user's company. In both cases the companies are located in Russia (in Moscow or near Voronezh). Some pictures posted on the user’s VK page were found on other social media profiles (example 1, example 2, example 3).

Although it is impossible to tell whether the Minfo page is linked to either the TikTok account @Aleksa369_ or the Facebook user Tatyana Petrovna Bilichenko, the close proximity of the timestamps at which the three entities shared the video of Mr. Sita seems worth pointing out.

Inauthentic behaviour in the comments

Since being posted on Minfo Facebook page on April 5th, the video has received around 4500 comments. Throughout April 5-7, there were 227 comments (which is still relatively high), but the highest number of comments came on the following two days – April 8 (1467 comments) and April 9 (1104 comments). More than half of all comments appeared under the post during those two days (it is worth mentioning that new ones were still being added at the time of the analysis). It is also worth noting that 1622 comments (about 1/3) were written by 484 users.
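The proportions quoted above can be checked with a few lines of arithmetic. The sketch below is only an illustration: it uses the figures reported in this article and takes the exact total of 4469 comments from the interval analysis later in the report (the paragraph above rounds it to around 4500). All variable and function names are illustrative.

```python
# Figures as reported in the article.
TOTAL_COMMENTS = 4469        # exact total quoted in the interval analysis
COMMENTS_APR_8 = 1467
COMMENTS_APR_9 = 1104
COMMENTS_BY_GROUP = 1622     # comments written by the 484 users
USERS_IN_GROUP = 484


def share(part: int, whole: int) -> float:
    """Return part/whole expressed as a percentage."""
    return 100.0 * part / whole


# Share of all comments posted on the two peak days.
peak_share = share(COMMENTS_APR_8 + COMMENTS_APR_9, TOTAL_COMMENTS)
# Share of all comments written by the 484 users.
group_share = share(COMMENTS_BY_GROUP, TOTAL_COMMENTS)
# Average number of comments per user in that group.
avg_per_user = COMMENTS_BY_GROUP / USERS_IN_GROUP

print(f"April 8-9: {peak_share:.1f}% of all comments")
print(f"484 users: {group_share:.1f}% of all comments")
print(f"Average comments per user in that group: {avg_per_user:.2f}")
```

With these inputs, the two peak days account for well over half of the comments, and the 484 users for a little more than a third.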

Detailed analysis of the comments and those who wrote them allowed us to observe suspicious accounts and their activity. The first thing visible when viewing the statements under the video was that the users agreed with its message: "100%", “He is right", "It is true", etc. The support was expressed both verbally and through various GIFs and emoticons. The images were very often repeated: they were exactly the same or very similar. Around 400 comments consisting of images were recorded as suspicious.

During the analysis, various languages were found among the comments, including Lithuanian, Russian, Moldavian, Georgian, Armenian, and Ukrainian, which is also somewhat unusual given that Minfo is only a small Lithuanian Facebook page. Moreover, most comments were written in languages other than Lithuanian, mainly in Russian. This was probably because the problematic video was published in Russian. However, as mentioned, the Minfo page is dedicated to Lithuanians and very rarely publishes content in other languages, which raises suspicions about the enormous engagement of non-Lithuanian Facebook users.

During the analysis, selected profiles were examined in detail. Some of the accounts seemed suspicious, considering the context, activity, and unnatural behaviour of the owners. For example, the video was commented on 5 times within 46 seconds from the account of Igor Sergeyevich.

Another account, Fey Grigoryevich (also locked), published 5 comments within 29 seconds, in which the content was limited to tagging selected people. The record-breaking account in terms of number and time was the profile of Volodymyr Pidhoretskyi (also locked, only profile photo visible), who commented on the video 9 times within 21 seconds.

Many other accounts left comments with an emoticon or gif only. Most often it was a single activity, sometimes 2-4 times in a short period of time (e.g., Irina Loyko, whose profile is limited to the main picture, commented on the post 4 times within 9 seconds, and left a total of 8 comments in a very short period of time).

The profiles which commented under the video on Minfo were locked to non-friends, with or without a profile picture, or limited to a few posts and pictures – often repeated several times. The following examples present other locked (or with hidden content) accounts:

An interesting case was the account of Lena Brendel, who has one friend, one profile picture (of a cake) and one post with this picture (published in 2020). There is no activity on the profile nor posts, yet the owner decided to add a quite elaborate comment under a video clip on the Minfo Facebook page: “👍🏻👍🏻👍🏻 After February 24 I reviewed a bunch of political scientists, economists, statisticians and came to the same conclusion. If you are called upon to kill someone for an idea, it means they want to make money with your hands. Turn around and walk away. Don't bring trouble to ordinary people.”

Another account, Tatiana Kulak, in general is also not very active, mostly sharing few pictures of flowers per year (sometimes the same one several times). The profile has no friends. The account left a comment: "Hahaha. You pay taxes on the profits you make. Start your own empire... And don't pay.... Of course, you're right to some extent... But you always have a choice... Be a bum.... Free and pay no taxes..."

In many analysed cases, the profiles were just limited to a few photos, sometimes published in small intervals (in 1-2 days) or, on the contrary, once a year on average. Below are examples of Facebook walls containing all the posts of such accounts.

One sign of inauthentic behaviour on social media is the unusual regularity of certain features. For this analysis, the interval between each pair of consecutive comments was measured to detect possible patterns of automated behaviour. A comparison of the obtained intervals showed that, across all 4469 comments, many interval lengths occurred two or more times. According to the calculations, only 1158 unique intervals occurred between the comments. Thus, as many as 3311 intervals constituted repetitions. Although repetitions are natural and it is impossible for them not to occur, their accumulation is puzzling. Since the highest number of comments was observed on April 8-9, those days also had the most interval repeats. However, what caught analysts' attention was the high number of repetitions of very small time gaps between the comments; for example, on April 8, as many as 1112 comments appeared at intervals of up to 60 seconds.
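The interval measurement described above can be sketched as follows. This is an illustration under stated assumptions rather than the analysts' actual procedure: it assumes intervals are taken between consecutive comments at one-second resolution, counts a repetition as any interval whose length has already been seen, and uses hypothetical names and sample data.

```python
from collections import Counter
from datetime import datetime, timedelta


def interval_stats(timestamps, short_gap_seconds=60):
    """Summarise the gaps between consecutive comment timestamps."""
    ts = sorted(timestamps)
    # Gap in whole seconds between each comment and the next one.
    gaps = [int((b - a).total_seconds()) for a, b in zip(ts, ts[1:])]
    counts = Counter(gaps)
    unique = len(counts)
    return {
        "intervals": len(gaps),
        "unique": unique,
        # Every occurrence beyond the first of a given gap length is a repetition.
        "repeats": len(gaps) - unique,
        "short": sum(1 for g in gaps if g <= short_gap_seconds),
    }


# Hypothetical sample: comments at these second offsets from a start time.
base = datetime(2022, 4, 8, 12, 0, 0)
sample = [base + timedelta(seconds=s) for s in (0, 10, 20, 30, 100, 400)]

stats = interval_stats(sample)
print(stats)
```

In the sample, the gaps are 10, 10, 10, 70 and 300 seconds, so three distinct gap lengths cover five intervals and two of them are repetitions. The figures reported above follow the same relationship: 1158 unique intervals plus 3311 repetitions.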

Finally, out of those profiles that left comments under the Minfo post, we looked at 300 accounts. Due to the very high number of comments (1467), the snippet reviewed represents only a small percentage, but this already allowed a clear trend to be observed. Accounts were reviewed sequentially, according to the time the comment was added, and potentially suspicious profile design was assessed. Accounts hidden to external observers or those with only a few posts/repeating photos/images and no activity were considered suspicious. Out of the analysed profiles, 27% were accounts locked to others, and in total 58% of accounts were considered suspicious.

It seems that even from a statistical point of view, there should be more “regular” accounts in the analysed sample. Of course, it would require extremely detailed analysis to establish that the reviewed accounts do not belong to real users but to bots. In fact, it is virtually impossible to prove this. However, the accumulation of suspicious accounts commenting in quick succession on a not-so-popular video by a Russian influencer (ostensibly unbiased but supposedly thought-provoking about the war), posted on a relatively small Lithuanian group the day after the world learned of the Bucha massacre, seems telling, providing another indication that the popularity of the post was not natural.

Inauthentic shares

Out of all 59K shares, Debunk.org analysts reviewed more than 15K. The analysis revealed that 2285 shares were made by accounts that had shared the problematic post more than once.
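Counting shares made by repeat sharers is straightforward once each share is attributed to an account. The sketch below assumes a plain list with one account identifier per share; the function name and the sample identifiers are hypothetical, and it does not reproduce the reported figures.

```python
from collections import Counter


def repeat_share_summary(sharer_ids):
    """Count the accounts that shared more than once and the shares they made."""
    counts = Counter(sharer_ids)
    repeaters = {account: n for account, n in counts.items() if n > 1}
    return {
        "accounts_total": len(counts),
        "repeat_accounts": len(repeaters),
        "shares_by_repeaters": sum(repeaters.values()),
    }


# Hypothetical sample: one entry per share, identified by the sharing account.
sample_shares = ["a", "a", "a", "b", "c", "c", "d"]

summary = repeat_share_summary(sample_shares)
print(summary)
```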

Using the CrowdTangle platform it was possible to find all 130 public groups on which the Minfo post was shared. These groups were then divided by country of origin [1]. Nearly half of the groups on which the Minfo post was shared were Ukrainian (54), while the number of groups located in Lithuania (24) was similar to the number of groups located in Russia (25). A considerable number of groups are also located in Moldova (15). Other countries where the post was shared were Armenia (1), Azerbaijan (2), Italy (1), Kazakhstan (3), Latvia (2), Romania (1) and Tajikistan (1). Taking into consideration that the original post was published on a Lithuanian page, and Lithuanian groups constitute only 18.5% of the total number of groups on which it was shared, the disproportion is significant and seemingly inorganic.
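The country shares can be recomputed from the counts given above. This is a small illustrative calculation; note that the per-country counts as listed add up to 129, so the sketch uses the reported total of 130 groups as the denominator, which is the value consistent with the 18.5% figure. Names are illustrative.

```python
# Per-country group counts as listed in the article.
GROUPS_BY_COUNTRY = {
    "Ukraine": 54,
    "Russia": 25,
    "Lithuania": 24,
    "Moldova": 15,
    "Kazakhstan": 3,
    "Azerbaijan": 2,
    "Latvia": 2,
    "Armenia": 1,
    "Italy": 1,
    "Romania": 1,
    "Tajikistan": 1,
}
REPORTED_TOTAL = 130  # total number of public groups stated in the article

listed_total = sum(GROUPS_BY_COUNTRY.values())

# Percentage of all reported groups per country.
shares = {
    country: 100.0 * n / REPORTED_TOTAL
    for country, n in GROUPS_BY_COUNTRY.items()
}

print(f"Sum of listed counts: {listed_total}")
for country, pct in sorted(shares.items(), key=lambda item: -item[1]):
    print(f"{country}: {pct:.1f}%")
```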

Most of the groups were dedicated to different cities (e.g., “Наш Павлоград”, “Любимая Кирилловка”, “Любимый Мелитополь”), occupations (e.g., “Группа «Опікункив Німеччині. Все буде Україна!!!»”, “Работа в Польше”), hobbies (e.g., “ВСЁ О РЫБАЛКЕ”, “MUZICĂ DE CALITATE 🎤🎧🔊🔊”) or diasporas (e.g., "RUSSIAN Aberdeen, Peterhead, Fraserburg SCOTLAND"), announcements (e.g., “Peterborough lietuviu turgelis [skelbimai]”). Therefore, it could be stated that apparently many groups on which the problematic video was shared did not match the narrative of the Minfo post.

In fact, only a minority of groups seem to have a similar profile to Minfo (i.e. anti-government, spreading disinformation and conspiracies), of which the majority belong to the Lithuanian social media sphere and are known for far-right or pro-Russian sentiment (e.g., “(NEBE)TYLOS PROTESTO grupė”, “Vardan išlikimo!”, “ŽAKO FRESKO MOKINIAI”, “KLAIPĖDOS LŠS – Didysis šeimos gynimo maršas 2021”, “Mindaugo Puidoko palaikymo grupė”). Although in the case of the Lithuanian social media sphere the pattern of sharing the Minfo post on different suspicious groups seems more organic, the fact that it was spread mainly on groups not attached to Lithuania and not thematically linked with Minfo's usual content seems to indicate that the material was shared according to a Coordinated Inauthentic Behaviour pattern. Closer inspection of the groups revealed that some of them may in fact professionally specialise in artificially "inflating" social media audiences.

In total the post was shared on public groups 145 times (sometimes it was shared more than once on the same group). During the first three days after publication, the post was shared only 10 times, almost exclusively on groups dedicated to the Lithuanian diaspora (e.g., “Rytų Londono Lietuvių Turgus”), news (e.g., “Šiandienos Aktualijos”) or known for sharing disinformation (e.g. “Žakas Fresko Grupė”). The only non-Lithuanian group on which the post was shared at that time was a group dedicated to the Russian community in Scotland (“RUSSIAN Aberdeen, Peterhead, Fraserburg SCOTLAND”). However, during April 8-9, the Minfo post was massively shared on various Russian, Ukrainian, Moldavian, Latvian, Kazakh, Azerbaijani and other groups, constituting nearly half (62) of all shares on public groups.

This period coincides with the time of the general increase in interactions with the post. Although it is natural that more shares generate an increase in reactions, the way the post was shared in some cases leaves no doubt that this was an artificial act. Below are examples of the most suspicious shares of the Minfo post.

The record-breaking profile was the account of Rasim Gudulov, who shared the video as many as 50 times in just two minutes on his profile page. Although at first glance the profile appears to belong to a real person (the owner has posted several family photos), upon closer inspection many elements seem suspicious. First of all, the account shares a lot of other posts in sequences within very short periods of time (some of them were labelled as disinformation by independent fact-checkers). Moreover, the account has over 4.5 thousand friends, but despite such a large number, the posts shared by him are hardly ever followed or interacted with. Although the account does not seem suspicious at first glance, it is most probably a bot or an account operated in an automated manner.

Although it is impossible to determine with 100% certainty whether a Facebook account is fake, closer inspection of other cases also revealed that the accounts were most probably used by their owners as sock puppets or bots for the purpose of spreading disinformation content. Examples of evidence of manipulation or automation include a user name that differs from the one in the URL, sharing multiple content pieces with the same title, multiple replies to non-existent comments, and using images from the Internet as profile pictures.

Other indicators of suspicious account behaviour worth mentioning include a mismatch between the language of the target group and the share’s title. For example, one account shared the Minfo post on the Lithuanian group “(NEBE)TYLOS PROTESTO grupė” with a Spanish title “He pensado que al grupo le gustaría esto” (“I thought the group would like this”). On 9th of April this account shared the Minfo post 29 times, and the majority of the shares occurred in less than 3 minutes (sometimes 5 seconds elapsed between consecutive shares).

It is worth noting that with regards to the Minfo post, the title “I thought the group would like this” was used in a repetitive pattern and occurred on several occasions, in different languages, like Russian (“Думаю, это понравится участникам группы”) or Lithuanian (“Pamaniau, kad grupei tai gali patikti”).

During further analysis it was noted that the same phrase was often used by accounts sharing disinformation posts about the war in Ukraine or far-right movements (example 1, example 2, example 3, example 4).

In general, out of 89 analysed accounts which shared the Minfo post on public groups, the vast majority seem suspicious. Many accounts show indications of being fake and/or automated.

Conclusions

Although the video of the Russian guru of mindfulness may seem harmless, it is quite purposefully adapted to the timing of the political context and the Kremlin’s disinformation metanarrative, and thus has an influence operation function. One of the key messages of the video content, ‘Russia should not be blamed for this’, is in line with the justification for Russia’s aggression against Ukraine. The focus should not be on individual events, but rather on the overall context – that neither the war nor its atrocities would have taken place if it were not for the destructive drive of the US to aggressively seize the Kremlin’s rightful historical possessions.

According to this meta-narrative, Ukraine is supposedly a fundamentally failed state, without any separate consciousness or identity, ruled by a supposedly criminal regime financed by money from the West, especially the US. The Ukrainians are in fact misled Russians, who, due to unfortunate circumstances and strong malicious pressure from the West, are trying to artificially construct a political identity, even though the ordinary people actively oppose and sabotage such actions. They supposedly secretly understand that it is impossible to separate themselves from what they truly belong to historically, namely the ‘Great Russian nation’, which was always led by the rulers of the Kremlin who inherited this right directly from God.

At that time (early April), the images of the Bucha massacre, which were spread around the world, became a scourge in the Kremlin’s narrative of the friendship between the Ukrainian and Russian peoples, and undermined the image of the Kremlin’s benevolent patronage. Various information channels have been used to try to blunt this negative impact. One of them was the analysed video. It should be noted that, although the Minfo page is aimed at a Lithuanian audience, the language of the video’s content and most of the profiles that actively shared and liked it were aimed at a Russian-speaking audience. This matters especially because the Bucha massacre may have had the greatest negative impact on the Kremlin’s image among Ukrainian and even Russian audiences.

As for the rest of the audience, especially the Western one, it can be said that the video appealed to their belief in the 'truth' (or lack thereof). The main claims of the Russian mindfulness guru support an ontological narrative that is sceptical of truth in general and can be put into frames like ‘there is no truth’, ‘truth always lies somewhere in the middle’, ‘we will never know the real truth’, etc. People sharing this type of conviction may think that although ‘human’ truth can be achieved, known and researched, the 'real' ('higher', ‘transcendent’) truth, which belongs, for example, to the sphere of religion, politics or mass media, is so sophisticated that it is beyond human capacity to comprehend. Therefore, every explanation provided by the official media must be a lie, because the ‘real truth’ is veiled.

Thus, it is easier to persuade such a person that we do not know the truth about the war in Ukraine, as the official media lie, and the truth is somewhere in the middle of the two narratives (Western and Russian). And if that is so, it is more convincing that the war is in fact fought by the US against Russia, which justifies also blaming the US for waging the war. In this way, the seed of uncertainty can later grow into a "useful idiot" who, in the name of his ontological independence, will defend the idea of the "golden mean" on and off social media, not knowing that in doing so he is serving Russia in the information war. Such manipulation is one of the disinformation techniques commonly used by the Kremlin, which implies its own vision of ‘truth’ that is in fact also part of a parallel reality. The aim of such an influence operation would be, on the one hand, to muddle real responsibility for war crimes and, on the other hand, to shape the image of them as necessary sacrifices in the Russian holy war against the Western “Evil Empire”.

Another very important point is that these statements, which deny the existence of truth and encourage concern for one’s own good rather than the common good, stimulate the deep beliefs inherent in the criminal consciousness, which is universal and can be found in all nations of the world. However, it should be emphasised here that in many countries, especially in the West, the criminal worldview is very niche and fundamentally opposed to a pro-state order worldview. By contrast, in parts of the societies of the former USSR empire (later the CIS), and especially in Russia, the criminal worldview is not only not opposed to the pro-statist view, but on the contrary, it is identical with it. This is because both the USSR and its satellites, as well as the current Kremlin and its allies, are ruled by figures of the criminal world, who instil and maintain a criminal worldview in the minds of their own people. Therefore, looking at (a) the political context; (b) the audience to whom the video was addressed; and (c) the main message conveyed by its content, it can be assumed that this was a well-considered information attack to salvage the crumbling remnants of the Kremlin’s image, possibly through a Coordinated Inauthentic Behaviour pattern.

Finally, this report shows that there is a need for further investigation of Coordinated Inauthentic Behaviour, especially using more of the available tools and opportunities to extract and analyse data in order to gather more evidence of the existence of CIB networks, as well as to help detect other networks, expose them, and prevent them from conducting influence operations via social media or make it more difficult for them to do so.

Notes

[1] Country of origin was determined based on the information provided in the section “About” for every group. If information about location was not provided, it was determined either by the admins’ country of living or the language in which posts were usually published.